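Setting Up an SPDK Environment

I originally wrote this post in 2021, but it was taken down for a policy violation because I had included a VMware license key in it. I recently needed SPDK again, so I rebuilt the environment, revised the post slightly, and am publishing it again.

Environment

VMware 16 + Ubuntu 21.04

Ubuntu download: https://repo.huaweicloud.com/ubuntu-releases/

After installing, remember to switch the package sources to a mirror.

Fetching the source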

1 | |

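This time I did not run into slow downloads; running the command above succeeded right away.

Possible fixes for slow downloads

You can change https to git in the clone URL, or append the .cnpmjs.org suffix after com.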

1 | |

After the git clone command finishes, edit the .gitmodules file in the spdk directory

1 | |

Finally, run

1 | |

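Git-related tips

- Turn off the system proxy and reset git's proxy settings: `git config --global --unset http.proxy` and `git config --global --unset https.proxy`

- Edit the hosts file to add GitHub hostname-to-IP mappings: https://github.com/521xueweihan/GitHub520

- Restart the network or the host

In the end, a reboot proved the most effective fix; with git, a lot comes down to luck.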

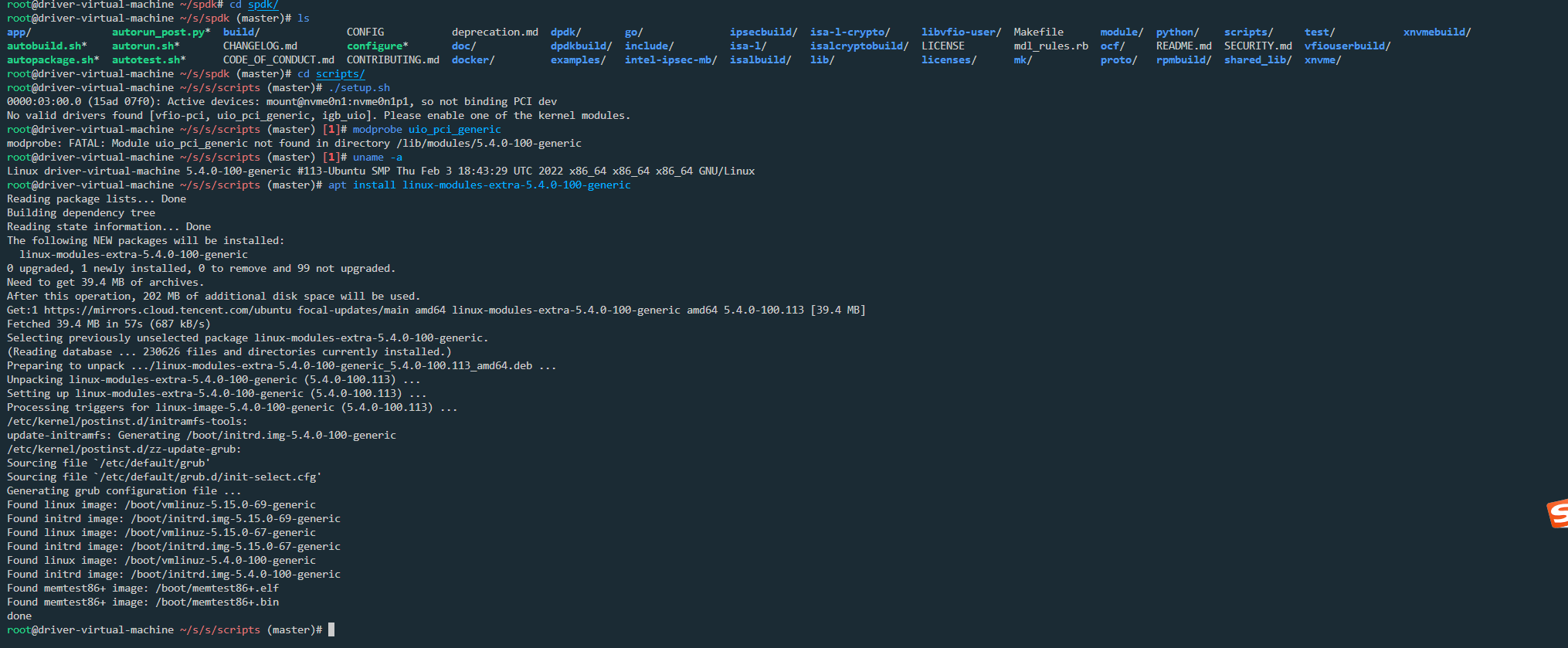

Building

1 | |

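Adding a virtual disk and running the example

Before running an SPDK application, some hugepages must be allocated, and any NVMe and I/OAT devices must be unbound from the native kernel drivers. SPDK includes a script that automates this process on Linux and FreeBSD. The script should be run as root. It only needs to be run once on the system.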

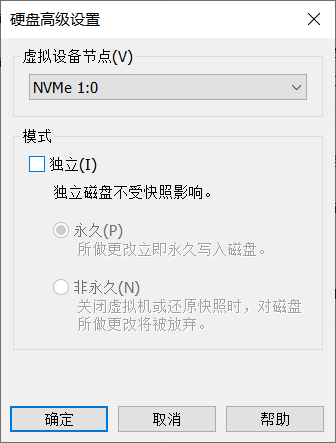

Add an unformatted NVMe disk in VMware

Make sure it is a new disk device (x:0)

No partitioning, mounting, or formatting is needed; a raw disk is enough

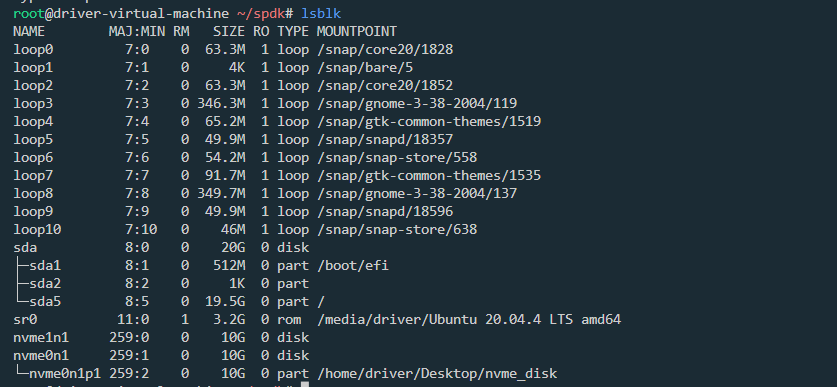

After adding it, use the lsblk command to check that it was added successfully (nvme1n1)

Then run

1 | |

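Problem: No valid drivers found [vfio-pci, uio_pci_generic, igb_uio]. Please enable one of the kernel modules.

Because the kernel in this VM had been switched, some modules were not installed, so I installed the extra-module package

Reference: missing uio and uio_pci_generic drivers in the Docker Ubuntu image

Binding and unbinding; HUGEMEM defaults to 2G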

1 | |

1 | |
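To make the HUGEMEM figure concrete, here is a minimal sketch of the arithmetic that turns a memory budget into a hugepage count. It assumes HUGEMEM is expressed in megabytes and that the hugepage size is known in kB (for example from the Hugepagesize line in /proc/meminfo). The function name `hugepages_needed` is hypothetical, and this is not the setup script's actual logic.

```c
#include <assert.h>
#include <stdint.h>

/* Number of hugepages needed to cover hugemem_mb megabytes, given the
 * hugepage size in kB. Rounds up so the whole budget is covered.
 * Hypothetical helper for illustration only. */
static uint64_t hugepages_needed(uint64_t hugemem_mb, uint64_t hugepage_kb)
{
    assert(hugepage_kb > 0);
    uint64_t total_kb = hugemem_mb * 1024;
    return (total_kb + hugepage_kb - 1) / hugepage_kb;
}
```

With the 2G default and 2 MB hugepages, `hugepages_needed(2048, 2048)` gives 1024 pages; with 1 GB hugepages the same budget needs only 2.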

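A brief walkthrough of the hello world code

I recommend skimming the Chinese edition of the official SPDK documentation first; converting it to PDF makes it a little easier to read

To see more output, enable debug mode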

1 | |

1 | |

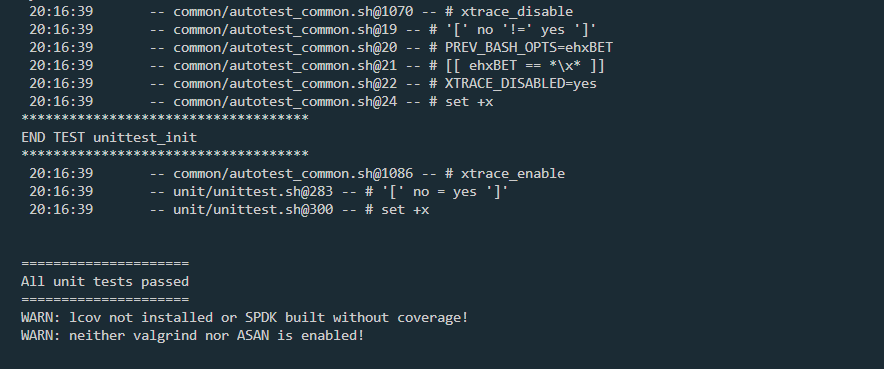

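The debug output shows that SPDK initialization is quite complex, so I only briefly analyze the process behind the lines printed below, that is, the hello_world function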

1 | |

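My related post: NVMe driver, notes on the request path

Most of the code in each function is omitted; you can use gdb to inspect the call relationships yourself

Allocating queue space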

1 | |

1 | |

1 | |

Sending admin commands to create the SQ/CQ

1 | |

1 | |

Related code

1 | |
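As a rough illustration of what those two admin commands carry, the sketch below fills in the Create I/O Completion Queue (opcode 0x05) and Create I/O Submission Queue (opcode 0x01) commands using the field layout from the NVMe base specification. The struct and function names are hypothetical stand-ins rather than SPDK's own types, and setting IEN=0 reflects the assumption that completions are polled instead of signalled by interrupt.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical 64-byte submission queue entry (not SPDK's struct). */
struct sketch_nvme_cmd {
    uint8_t  opc;        /* opcode */
    uint8_t  flags;
    uint16_t cid;        /* command identifier */
    uint32_t nsid;
    uint32_t rsvd[2];
    uint64_t mptr;
    uint64_t prp1;       /* physical base address of the queue memory */
    uint64_t prp2;
    uint32_t cdw10;
    uint32_t cdw11;
    uint32_t cdw12;
    uint32_t cdw13;
    uint32_t cdw14;
    uint32_t cdw15;
};

#define SKETCH_OPC_CREATE_IO_SQ 0x01
#define SKETCH_OPC_CREATE_IO_CQ 0x05

/* The CQ has to be created first, because the SQ command names its CQ. */
static void build_create_io_cq(struct sketch_nvme_cmd *cmd, uint16_t qid,
                               uint16_t num_entries, uint64_t cq_phys)
{
    assert(num_entries >= 2);
    memset(cmd, 0, sizeof(*cmd));
    cmd->opc = SKETCH_OPC_CREATE_IO_CQ;
    cmd->prp1 = cq_phys;
    /* CDW10: QSIZE (zero-based) in bits 31:16, QID in bits 15:0 */
    cmd->cdw10 = ((uint32_t)(num_entries - 1) << 16) | qid;
    /* CDW11: PC=1 (physically contiguous), IEN=0 (assumed: polled mode) */
    cmd->cdw11 = 0x1;
}

static void build_create_io_sq(struct sketch_nvme_cmd *cmd, uint16_t qid,
                               uint16_t cqid, uint16_t num_entries,
                               uint64_t sq_phys)
{
    assert(num_entries >= 2);
    memset(cmd, 0, sizeof(*cmd));
    cmd->opc = SKETCH_OPC_CREATE_IO_SQ;
    cmd->prp1 = sq_phys;
    cmd->cdw10 = ((uint32_t)(num_entries - 1) << 16) | qid;
    /* CDW11: CQID in bits 31:16, QPRIO=0 in bits 2:1, PC=1 in bit 0 */
    cmd->cdw11 = ((uint32_t)cqid << 16) | 0x1;
}
```

For a 256-entry queue pair with ID 1, both commands end up with CDW10 equal to 0x00FF0001, since the queue size field is zero-based.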

Allocating the DMA buffer

1 | |

1 | |
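For readers without an SPDK build at hand, the shape of this allocation (a zero-filled buffer with a fixed alignment) can be imitated with plain C11. This is only a stand-in: ordinary heap memory is not pinned and has no known physical address, so it is not usable for real DMA. The helper name `alloc_zeroed_aligned` is hypothetical, and the 4 KiB size and alignment used in the note below are an assumption about what hello_world requests.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Stand-in for a zeroed, aligned buffer allocation.
 * NOT DMA-safe: the memory is neither pinned nor physically contiguous. */
static void *alloc_zeroed_aligned(size_t size, size_t align)
{
    /* align must be a non-zero power of two */
    assert(align != 0 && (align & (align - 1)) == 0);
    /* C11 aligned_alloc expects the size to be a multiple of the alignment */
    size_t rounded = (size + align - 1) / align * align;
    void *buf = aligned_alloc(align, rounded);
    if (buf != NULL) {
        memset(buf, 0, rounded);
    }
    return buf;
}
```

Calling `alloc_zeroed_aligned(0x1000, 0x1000)` returns a page-aligned 4 KiB block of zeros, which the caller releases with `free`.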

PRP handling

Next come the two PRP-related lines of output

1 | |

Recommended reading:

The PRP algorithm at the core of SSD NVMe

Figure 87: Common Command Format, in the NVM-Express-Base-Specification

1 | |

Printing the relevant information with gdb

1 | |

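The virtual and physical addresses printed in the output are the address of sequence.buf. The length is 512 because lba_count in spdk_nvme_ns_cmd_write is counted in sectors, and one sector is 512 bytes.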
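The length and PRP arithmetic can be sketched in a few lines of C. The code below assumes a 4096-byte memory page and a physically contiguous buffer, and all names (`transfer_bytes`, `describe_prps`, `struct prp_summary`) are hypothetical rather than SPDK's. It follows the NVMe rule that PRP1 holds the start address, PRP2 holds the second page's address when the transfer touches exactly two pages, and a PRP list is needed beyond that.

```c
#include <assert.h>
#include <stdint.h>

#define SKETCH_PAGE_SIZE 4096u   /* assumed memory page size */

struct prp_summary {
    uint64_t prp1;           /* start address of the transfer */
    uint64_t prp2;           /* second page address, 0 if not used directly */
    uint32_t num_pages;      /* memory pages touched by the transfer */
    int      needs_prp_list; /* 1 if PRP2 would have to point at a PRP list */
};

/* lba_count is in sectors, so the byte length is count * sector size. */
static uint32_t transfer_bytes(uint32_t lba_count, uint32_t sector_size)
{
    return lba_count * sector_size;
}

/* Assumes the buffer is physically contiguous starting at phys_addr. */
static struct prp_summary describe_prps(uint64_t phys_addr, uint32_t len)
{
    assert(len > 0);
    struct prp_summary s = {0};
    uint64_t offset = phys_addr % SKETCH_PAGE_SIZE;
    uint64_t first_chunk = SKETCH_PAGE_SIZE - offset; /* bytes left in page 1 */

    s.prp1 = phys_addr;
    if (len <= first_chunk) {
        s.num_pages = 1;
        return s;
    }
    uint64_t remaining = len - first_chunk;
    s.num_pages = 1 + (uint32_t)((remaining + SKETCH_PAGE_SIZE - 1) / SKETCH_PAGE_SIZE);
    if (s.num_pages == 2) {
        s.prp2 = phys_addr - offset + SKETCH_PAGE_SIZE;
    } else {
        s.needs_prp_list = 1;
    }
    return s;
}
```

A single 512-byte sector written from a page-aligned buffer fits entirely in PRP1, which matches the one-sector case described above; the same 512 bytes starting 256 bytes before a page boundary would also need PRP2.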

The call chain jumps through a function pointer twice

1 | |

1 | |
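For readers less familiar with this pattern, the following self-contained sketch shows two function-pointer jumps of the kind found in such a call chain: one through a per-transport operations table, and one through a per-request completion callback. Every name here is hypothetical, and the sketch does not claim to reproduce the two specific pointers referred to above.

```c
#include <assert.h>
#include <stddef.h>

typedef void (*sketch_cmd_cb)(void *cb_arg, int status);

/* A request remembers which callback to run when it completes. */
struct sketch_request {
    sketch_cmd_cb cb_fn;
    void *cb_arg;
};

/* Each transport supplies its own implementation of submit_request. */
struct sketch_transport_ops {
    const char *name;
    int (*submit_request)(struct sketch_request *req);
};

/* Pretend PCIe transport: the "device" completes the request at once. */
static int sketch_pcie_submit(struct sketch_request *req)
{
    req->cb_fn(req->cb_arg, 0);          /* jump 2: completion callback */
    return 0;
}

static const struct sketch_transport_ops sketch_pcie_ops = {
    "PCIE", sketch_pcie_submit
};

/* Generic layer: does not know which transport it is talking to. */
static int sketch_submit(const struct sketch_transport_ops *ops,
                         struct sketch_request *req)
{
    assert(ops != NULL && ops->submit_request != NULL);
    return ops->submit_request(req);     /* jump 1: transport ops table */
}

/* Example callback: records success (1) or failure (-1) in an int flag. */
static void sketch_write_done(void *cb_arg, int status)
{
    int *done = cb_arg;
    *done = (status == 0) ? 1 : -1;
}
```

Submitting a request whose `cb_fn` is `sketch_write_done` through `sketch_pcie_ops` sets the caller's flag to 1. In gdb, stepping into such a call is an easy way to see which concrete function a pointer resolves to.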

Data send and receive flow

The execution flow of the hello_world function is roughly as follows:

1 | |

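Read and write commands are handled similarly, so I only analyze the path from submitting the write command to invoking the write completion callback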

1 | |

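According to the trace analysis in section 6.5 of 《深入浅出SSD》, a host read request proceeds as follows:

- The host prepares the command and places it in the SQ

- The host writes the SQ Tail doorbell to tell the SSD to fetch the command (Memory Write TLP)

- The SSD receives the notification and fetches the command from the SQ on the host side (Memory Read TLP)

- The SSD executes the command and transfers the data to the host (Memory Write TLP)

- The SSD posts the completion status to the host's CQ

- The SSD uses an interrupt to tell the host to process the CQ

- The host processes the CQ entry and updates the CQ Head doorbell (Memory Write TLP)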
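The steps above can be sketched as a small in-memory simulation of one queue pair. Everything here is a hypothetical stand-in: the doorbells are plain variables instead of MMIO registers, the device is a function call, and the data transfer is omitted. One deliberate difference from the list is that the host polls the CQ and detects new entries by their phase bit instead of waiting for an interrupt, which is how SPDK's polled-mode driver works.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define SKETCH_QDEPTH 4u

struct sketch_cqe {
    uint16_t cid;            /* command this entry completes */
    uint16_t status_phase;   /* bit 0 = phase tag, upper bits = status (0 = ok) */
};

struct sketch_qpair {
    uint16_t sq[SKETCH_QDEPTH];          /* each slot holds only a command ID */
    struct sketch_cqe cq[SKETCH_QDEPTH];
    uint16_t sq_tail, cq_head;           /* host-side indices */
    uint8_t  phase;                      /* phase tag the host expects next */
    uint16_t sq_tail_db, cq_head_db;     /* stand-ins for doorbell registers */
    uint16_t dev_sq_head, dev_cq_tail;   /* device-side indices */
    uint8_t  dev_phase;
};

static void sketch_qpair_init(struct sketch_qpair *q)
{
    memset(q, 0, sizeof(*q));
    q->phase = 1;       /* CQ memory starts zeroed, so first valid phase is 1 */
    q->dev_phase = 1;
}

static void host_submit(struct sketch_qpair *q, uint16_t cid)
{
    uint16_t next = (uint16_t)((q->sq_tail + 1) % SKETCH_QDEPTH);
    /* Simplification: a real host learns the SQ head from completion entries. */
    assert(next != q->dev_sq_head);
    q->sq[q->sq_tail] = cid;             /* place the command in the SQ */
    q->sq_tail = next;
    q->sq_tail_db = q->sq_tail;          /* ring the SQ Tail doorbell */
}

/* Simplification: no CQ overflow check; the host is expected to poll. */
static void device_process(struct sketch_qpair *q)
{
    while (q->dev_sq_head != q->sq_tail_db) {
        uint16_t cid = q->sq[q->dev_sq_head];            /* fetch the command */
        q->dev_sq_head = (uint16_t)((q->dev_sq_head + 1) % SKETCH_QDEPTH);
        q->cq[q->dev_cq_tail].cid = cid;                 /* post the completion */
        q->cq[q->dev_cq_tail].status_phase = q->dev_phase;
        if (++q->dev_cq_tail == SKETCH_QDEPTH) {
            q->dev_cq_tail = 0;
            q->dev_phase ^= 1;                           /* flip phase on wrap */
        }
    }
}

/* Returns the number of completions found; their IDs are stored in cids. */
static int host_poll(struct sketch_qpair *q, uint16_t *cids, int max)
{
    int n = 0;
    while (n < max && (q->cq[q->cq_head].status_phase & 1) == q->phase) {
        cids[n++] = q->cq[q->cq_head].cid;
        if (++q->cq_head == SKETCH_QDEPTH) {
            q->cq_head = 0;
            q->phase ^= 1;
        }
    }
    if (n > 0) {
        q->cq_head_db = q->cq_head;      /* update the CQ Head doorbell */
    }
    return n;
}
```

The phase bit is what lets the host tell a fresh completion from a stale one after the ring wraps: the device flips the tag it writes on each pass, and the host flips the tag it expects at the same point.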

The three host-side lines of code are as follows:

1 | |

Related code

1 | |

1 | |

SPDK GitHub repository:

https://github.com/spdk/spdk

Chinese edition of the official SPDK documentation

Official English documentation:

https://spdk.io/doc/

Futures and promises in concurrent programming: how much do you know?

The principles behind configure, make, and make install (translation)